Initialization Time in Programs

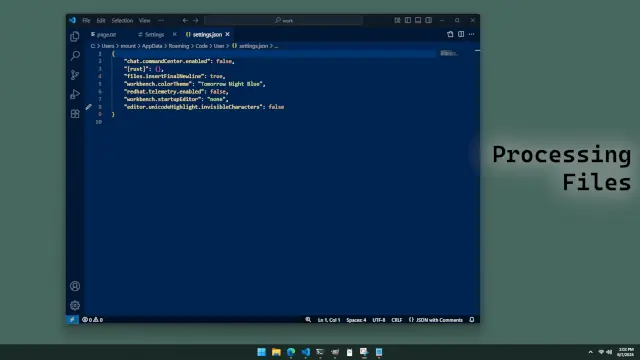

Suppose we have a program that must process a file. We can use different algorithms to process the file—one version processes data faster, but has a greater initialization time than the other. Which should we choose?

For a program that processes one small file, it is faster overall to avoid a high initialization cost. But if we have many files to process, or even a single large file, it is better to spend the time initializing the faster data structure.

The undecidability of software is one of my favorite things about it. One version is faster on large amounts of data, and the other on small amounts. In compiler theory, this is referred to as "undecidability"—it is impossible to tell which is better unless we consider all the possible use cases of the program.

But in my opinion, given the interest in "big data" in modern times, it is probably better just to pay the initialization cost—you might want to process a lot of data later (even if you don't know it yet).